Accuracy in Automated Meeting Transcription: A Comparative Review for the UK Market

Introduction

Automated speech recognition (ASR), often marketed as AI transcription, has become embedded in professional practice across the United Kingdom. From higher education and healthcare to boardrooms and government hearings, organisations increasingly rely on machine-generated transcripts for record-keeping, accessibility, and analysis. The proliferation of online meeting platforms such as Microsoft Teams and Zoom during the COVID-19 pandemic accelerated this reliance.

Yet accuracy remains the critical fault-line in adoption. Professionals require reliable transcripts that withstand scrutiny, particularly in regulated domains such as law, finance, and public administration. While vendors often promise near-perfect results, independent studies repeatedly show that such promises rarely hold up in practice. The principal measure of transcription quality, Word Error Rate (WER), quantifies the proportion of substitutions, deletions, and insertions relative to a reference transcript. Lower WER equates to higher quality.

This article collates recent benchmarks of leading transcription tools—TurboScribe, OpenAI’s Whisper, Otter.ai, Zoom’s native transcription, and Microsoft Teams transcription—evaluating their reported WERs, limitations, and practical performance in meeting contexts characterised by crosstalk and accent variability. Particular attention is given to conditions likely to occur in the UK workplace, including overlapping speech and the presence of diverse regional and international Englishes.

Understanding Word Error Rate (WER)

WER has become the de facto metric for evaluating speech recognition systems.

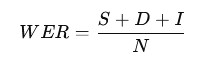

What is the formula for WER?

WER is calculated as:

$$\mathrm{WER} = \frac{S + D + I}{N}$$

where S = substitutions, D = deletions, I = insertions, and N = total words in the reference.

A WER of 0% indicates a perfect transcript, while a WER of 100% or more (insertions can push the figure above 100%) indicates complete failure. In practice, contemporary systems claim single-digit WERs under ideal laboratory conditions. However, field performance in meetings, with their poor microphones, background noise, crosstalk, and varied accents, produces far higher figures.
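
To make the formula concrete, here is a minimal Python sketch of one common way to compute WER: align the two word sequences with a word-level edit distance and divide the minimum number of edits by the reference length. The function names and the normalisation step (lowercasing and stripping punctuation) are illustrative assumptions, not the method used by any tool or study reviewed here, and published figures can shift noticeably depending on such normalisation choices.

```python
import re


def normalise(text: str) -> list[str]:
    """Lowercase, replace punctuation (except straight apostrophes) with spaces, split into words."""
    return re.sub(r"[^\w\s']", " ", text.lower()).split()


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return (S + D + I) / N, using the minimum word-level edit distance."""
    ref, hyp = normalise(reference), normalise(hypothesis)
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # previous[j] = fewest edits turning the reference words seen so far
    # into the first j hypothesis words
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1       # reference word missing from hypothesis
            insertion = current[j - 1] + 1   # extra word in hypothesis
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


# One substitution and one deletion against 7 reference words: 2 / 7
print(round(word_error_rate(
    "The committee approved the budget on Tuesday.",
    "the committee approve the budget Tuesday",
), 3))  # 0.286

# Three inserted words against a one-word reference: WER of 3.0, i.e. 300%
print(word_error_rate("Yes.", "yes I agree entirely"))  # 3.0
```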

A WER under 10% is often described as “publishable” quality; 10–25% can be “usable with significant editing”, although University Transcriptions often finds it faster to transcribe from scratch at these levels; and above 25% typically requires substantial human intervention, amounting to a full rewrite. For professional UK contexts such as court reporting, academic research, or clinical documentation, anything above 5% may be unacceptable.
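
The bands above can be written down as a small lookup. This is only a sketch of the article's rough thresholds: the function name, the `strict` flag, and the returned labels are hypothetical, and the cut-offs are rules of thumb rather than a formal standard.

```python
def quality_band(wer: float, strict: bool = False) -> str:
    """Map a WER given as a fraction (e.g. 0.12 for 12%) to the rough bands described above.

    strict=True applies the 5% ceiling suggested for court, research, and clinical work.
    """
    if wer < 0:
        raise ValueError("WER cannot be negative")
    if strict and wer > 0.05:
        return "may be unacceptable for court, research, or clinical records"
    if wer < 0.10:
        return "publishable"
    if wer <= 0.25:
        return "usable with significant editing"
    return "substantial human intervention required"


print(quality_band(0.08))               # publishable
print(quality_band(0.08, strict=True))  # may be unacceptable for court, research, or clinical records
print(quality_band(0.30))               # substantial human intervention required
```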

TurboScribe

TurboScribe is marketed as a premium transcription tool, claiming “99.8% accuracy”1. This equates to a WER of approximately 0.2%, which if consistently achieved would exceed even human stenographers. TurboScribe is powered by OpenAI’s Whisper model but layers proprietary processing on top.
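
To see what such a headline figure would mean in practice, the sketch below converts an accuracy percentage to WER (treating accuracy as 100% minus WER, as this article does) and estimates the number of word errors in a recording. The function names are hypothetical, and the default speaking rate of 150 words per minute is an assumption chosen purely for illustration.

```python
def wer_from_accuracy(accuracy_percent: float) -> float:
    """Convert a headline accuracy percentage to WER as a fraction (accuracy = 100% - WER)."""
    return (100.0 - accuracy_percent) / 100.0


def expected_errors(wer: float, minutes: float, words_per_minute: float = 150.0) -> int:
    """Estimate word errors in a recording; words_per_minute is an assumed speaking rate."""
    return round(wer * minutes * words_per_minute)


# A one-hour meeting at the assumed rate contains about 9,000 words.
print(expected_errors(wer_from_accuracy(99.8), minutes=60))  # 18
print(expected_errors(0.10, minutes=60))                     # 900
```

Under these assumptions, the advertised figure would leave roughly 18 errors in an hour of speech, against about 900 at a 10% WER.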

Independent validation is lacking. Unlike Whisper or Otter, TurboScribe has not yet been included in peer-reviewed benchmarks. Given that Whisper itself records WERs of 8–15% under difficult conditions (see below), TurboScribe’s claim should be interpreted with caution at best, and at worst as an unverifiable marketing figure. Marketing figures may be based on controlled audio with single speakers and professional microphones, conditions far removed from a typical team meeting with overlapping speech and diverse accents.

Estimated performance in UK meetings: Based on its Whisper foundation and potential post-processing, TurboScribe may achieve 8–18% WER under crosstalk and accent variability. While better than many live transcription services, it is unlikely to reach the near-perfect levels suggested by marketing.

Whisper

OpenAI’s Whisper is an open-source ASR model trained on 680,000 hours of multilingual data. Its availability has spurred a wave of derivatives, from academic research to commercial products like TurboScribe.

Independent studies: A peer-reviewed investigation into transcription of psychiatric interviews compared Whisper with Amazon Transcribe and Zoom/Otter. Whisper recorded a median WER of 14.8% (interquartile range 11.1–19.7%)2. In this demanding domain, Whisper underperformed Amazon (8.9%) but outperformed Zoom/Otter (19.2%).
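
Figures of this kind (a median with an interquartile range) summarise per-recording WERs rather than a single pooled score. As a rough sketch of how such a summary can be produced with Python's standard library, assuming a list of per-recording WER percentages is available: the function name and the sample values are hypothetical and are not data from the study, and the study's own quartile method is not stated here.

```python
from statistics import median, quantiles


def summarise_wer(per_recording_wer: list[float]) -> tuple[float, float, float]:
    """Return (median, lower quartile, upper quartile) of per-recording WERs."""
    if len(per_recording_wer) < 2:
        raise ValueError("need at least two recordings to estimate quartiles")
    # n=4 yields three cut points: lower quartile, median, upper quartile
    q1, _, q3 = quantiles(per_recording_wer, n=4, method="inclusive")
    return median(per_recording_wer), q1, q3


# Hypothetical per-recording WERs in percent, for illustration only
sample = [10.0, 12.0, 14.0, 16.0, 18.0]
mid, q1, q3 = summarise_wer(sample)
print(f"median {mid:.1f}% (IQR {q1:.1f}-{q3:.1f}%)")  # median 14.0% (IQR 12.0-16.0%)
```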

Vendor benchmarks: Some industry reports claim more optimistic figures. For example, Gladia (2024) reported a median WER of 8.06% for Whisper-v2 in their internal testing3. While promising, such data are not peer-reviewed and may not generalise.

Practical assessment for meetings: Whisper is robust to noise and accents, but struggles with crosstalk and domain-specific jargon. For post-processed meeting audio in the UK, one may realistically expect 10–20% WER. This is “usable with significant editing” but unlikely to satisfy contexts requiring verbatim records.

Otter.ai

Otter.ai is well known in the UK business and education sectors for real-time transcription, often integrated with Zoom.

Benchmark data: In the same psychiatric interview study cited above, Zoom’s Otter-powered live transcription yielded a median WER of 19.2% (IQR 15.1–24.8%)2. Another blog-based evaluation suggested a WER of 12.6% for business meetings under good audio conditions4. The discrepancy reflects context: specialised vocabularies and overlapping dialogue drive error rates higher, whereas clean corporate speech in a quiet setting produces more favourable outcomes.

UK meeting performance: In real-world British settings with accents ranging from Received Pronunciation to Scottish, Scouse, and beyond, Otter is likely to yield 12–20% WER. While serviceable for note-taking and summaries, transcripts will require extensive manual correction for minutes or official records.

Zoom Transcription

Zoom offers built-in transcription (often using Otter.ai for English) and has more recently integrated its AI Companion.

Vendor-commissioned study: A 2024 report by TestDevLab, commissioned by Zoom, found that Zoom achieved a WER of 7.40%, compared with 10.16% for Webex and 11.54% for Microsoft Teams5. While encouraging, the vendor-commissioned nature of this research warrants caution. Results may have been obtained under optimal audio conditions.

Peer-reviewed study: In the psychiatric interview comparison, Zoom/Otter again scored 19.2% WER, confirming that performance degrades in more naturalistic and demanding speech settings2.

UK meeting performance: Post-processed Zoom transcripts in real-world conditions are likely to fall within the 12–25% WER range. Where audio is clean and speakers cooperative, performance approaches 10%. In dynamic, multi-accent discussions with interruptions, expect closer to 20%.

Microsoft Teams Transcription

Microsoft Teams is one of the most widely used meeting platforms in UK organisations and includes built-in transcription.

Third-party benchmarks: The Zoom-commissioned TestDevLab report cited above recorded a WER of 11.54% for Teams compared with 7.40% for Zoom5. Other industry blogs suggest practical accuracy rates of 80–90%, i.e. WERs of 10–20%6.

Limitations: Microsoft itself acknowledges that live transcription “will not be perfect”7. Accuracy is particularly challenged by accent variability and overlapping dialogue—both common in UK workplaces.

UK meeting performance: In post-processing mode, Teams transcripts are likely to fall in the 12–25% WER range, broadly comparable to Zoom, with occasional outperformance or underperformance depending on specific meeting dynamics.

Comparative Summary

The following table synthesises reported and estimated performance:

| Tool | Peer-reviewed WER | Vendor/Blog WER | Estimated WER in UK Meetings (post-processing, with crosstalk + accents) |
|---|---|---|---|
| TurboScribe | N/A | Claim: 0.2% (marketing blurb) | 8–18% |
| Whisper | 14.8% (psychiatric interviews)2 | 8.06% (vendor)3 | 10–20% |
| Otter.ai | 19.2% (psychiatric interviews)2 | 12.6% (blog)4 | 12–20% |
| Zoom | 19.2% (psychiatric interviews)2 | 7.40% (vendor)5 | 12–25% |
| Microsoft Teams | N/A | 11.54% (vendor)5 | 12–25% |
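
One way to read the table is to compare the two reported columns directly. The sketch below encodes the figures from the table and computes, for each tool that has both, the percentage-point gap between the peer-reviewed and the vendor or blog figure. The names `reported_wer` and `optimism_gap` are hypothetical, and because the two columns were measured on different audio, the gap is indicative only.

```python
# tool: (peer-reviewed WER %, vendor/blog WER %); None where the table lists N/A
reported_wer = {
    "TurboScribe": (None, 0.2),
    "Whisper": (14.8, 8.06),
    "Otter.ai": (19.2, 12.6),
    "Zoom": (19.2, 7.40),
    "Microsoft Teams": (None, 11.54),
}


def optimism_gap(table: dict) -> dict:
    """Peer-reviewed minus vendor/blog WER, in percentage points, where both exist."""
    return {
        tool: round(peer - vendor, 2)
        for tool, (peer, vendor) in table.items()
        if peer is not None and vendor is not None
    }


for tool, gap in optimism_gap(reported_wer).items():
    print(f"{tool}: {gap} points")
```

On these figures the gap is largest for Zoom, at about 11.8 points, which is consistent with the caution urged above about vendor-commissioned results.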

Key Challenges: Crosstalk and Accents

Crosstalk

Crosstalk—the simultaneous speech of multiple participants—remains the Achilles’ heel of ASR. Even state-of-the-art systems trained on vast datasets falter when confronted with overlapping speech. In meetings where interruptions and lively debates are common, this issue is especially pronounced.

Accents

The United Kingdom is home to extraordinary dialectal diversity, from Geordie to Glaswegian and Scouse. London and other metropolitan areas also bring international varieties of English into the professional sphere. ASR systems trained primarily on American English often misrecognise these varieties, inflating WER.

Whisper, with its multilingual training corpus, has somewhat better resilience to accent diversity, but no system currently achieves parity with human transcribers across the full range of UK and global English accents.

Practical Implications for UK Organisations

- Accessibility and Equality: Under the Equality Act 2010, UK employers and educational institutions must ensure accessibility for disabled individuals, including those who rely on transcripts. Inaccurate automated transcription risks non-compliance.

- Legal and Regulatory Standards: Sectors such as law, healthcare, and finance require verbatim records. A WER above 10% may be unacceptable, necessitating human oversight.

- Cost–Benefit Analysis: While automated tools reduce costs compared to human transcription, post-meeting editing and verification introduce hidden labour costs. UK organisations should budget for hybrid workflows in which machine transcripts are corrected by human editors.

- Market Opportunities: There is strong potential for UK-specific ASR development. Tailoring models to regional dialects could close the accuracy gap. Companies such as TP Transcription in the UK already offer human-checked transcripts to address these shortcomings.

Recommendations

- Hybrid Approach: Use ASR for draft transcripts, followed by human editing. This balances efficiency with accuracy.

- Vendor Transparency: Push suppliers to publish independent benchmarks on UK audio datasets.

- Training Data Expansion: Encourage vendors to include UK regional accents in the training data for their ASR models.

- Post-Processing Tools: Leverage diarisation, noise reduction, and accent-adaptation modules to mitigate weaknesses.
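
For organisations that want to act on the vendor-transparency recommendation, a benchmark on UK audio is most useful when broken down by accent. The sketch below pools error counts and reference word counts per accent group and divides the totals, so that long recordings are not under-weighted. The function name, the tuple layout, and the sample numbers are all hypothetical; real counts would come from scoring transcripts against human references.

```python
from collections import defaultdict


def wer_by_group(recordings: list[tuple[str, int, int]]) -> dict[str, float]:
    """Pool (group, error_count, reference_words) rows and return WER per group."""
    errors: dict[str, int] = defaultdict(int)
    words: dict[str, int] = defaultdict(int)
    for group, error_count, reference_words in recordings:
        errors[group] += error_count
        words[group] += reference_words
    # Skip groups with no reference words to avoid dividing by zero
    return {group: errors[group] / words[group] for group in words if words[group] > 0}


# Hypothetical scoring results: (accent label, word errors, reference words)
sample = [
    ("Glaswegian", 30, 200),
    ("Glaswegian", 10, 200),
    ("Received Pronunciation", 12, 300),
]
print(wer_by_group(sample))
```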

Conclusion and Practical Next Steps

Despite bold marketing claims, no current ASR system guarantees near-perfect accuracy in UK meeting conditions. TurboScribe’s advertised 99.8% accuracy remains unsupported by independent benchmarks. Whisper offers strong open-source performance, with WERs around 10–20% under challenging conditions. Otter.ai, Zoom, and Microsoft Teams transcription deliver comparable results, typically 12–25% in real-world meetings with crosstalk and accents.

For British organisations, the pragmatic path forward lies in hybrid workflows: leveraging ASR to reduce costs and turnaround time, while retaining human editors for accuracy. As the UK continues to diversify linguistically and technologically, robust transcription remains not only a matter of convenience but one of accessibility, legal compliance, and inclusivity.

Human-Verified Accuracy for the UK

While automated transcription can provide a useful draft, the evidence shows that AI alone cannot yet guarantee the precision demanded by UK academia, business, or regulated sectors. Word Error Rates of 5–25% in real-world meetings mean that sensitive discussions or research interviews require human verification to meet compliance and accessibility standards.

For organisations seeking dependable accuracy:

- Academic research transcription: University Transcriptions specialises in human-checked services for universities, researchers, and students across the UK. Their team ensures that disciplinary jargon, multiple accents, and complex recordings are transcribed with precision.

- Professional business transcription: TP Transcription provides professional, confidential transcription for corporate, legal, medical, and public-sector clients. Their hybrid approach—combining technology with expert editors—delivers transcripts fit for regulatory and operational requirements.

By pairing automated tools for speed with UK-based human editors for accuracy, organisations can secure the best of both worlds: efficiency without compromising reliability. For accurate results, hybrid transcription may be the future.

Footnotes

1. TurboScribe, Official website, 2025. Available at: https://turboscribe.ai

2. Patel, R. et al., “Compliant Transcription Services for Virtual Psychiatric Interviews,” JMIR Mental Health, 2023. Available at: https://pmc.ncbi.nlm.nih.gov/articles/PMC10646674/

3. Gladia, “Benchmarks: Whisper-v2 Accuracy,” 2024.

4. SuperAGI, “Transcription Showdown: Comparing the Accuracy and Efficiency of Top AI Meeting Transcription Tools,” 2024.

5. TestDevLab, “Zoom AI Companion Transcription Benchmark,” 2024. Reported in TechRadar.

6. Tactiq, “Microsoft Teams AI Transcription Accuracy,” 2024.

7. University of Wisconsin–Madison, Microsoft 365 Live Transcription Documentation, 2023.